Edge AI in Industry: YOLOv11, Hailo-8, and the End of Defects

Computer vision is no longer just for labs. Learn to implement real-time defect detection (100+ FPS) integrated with your PLC.

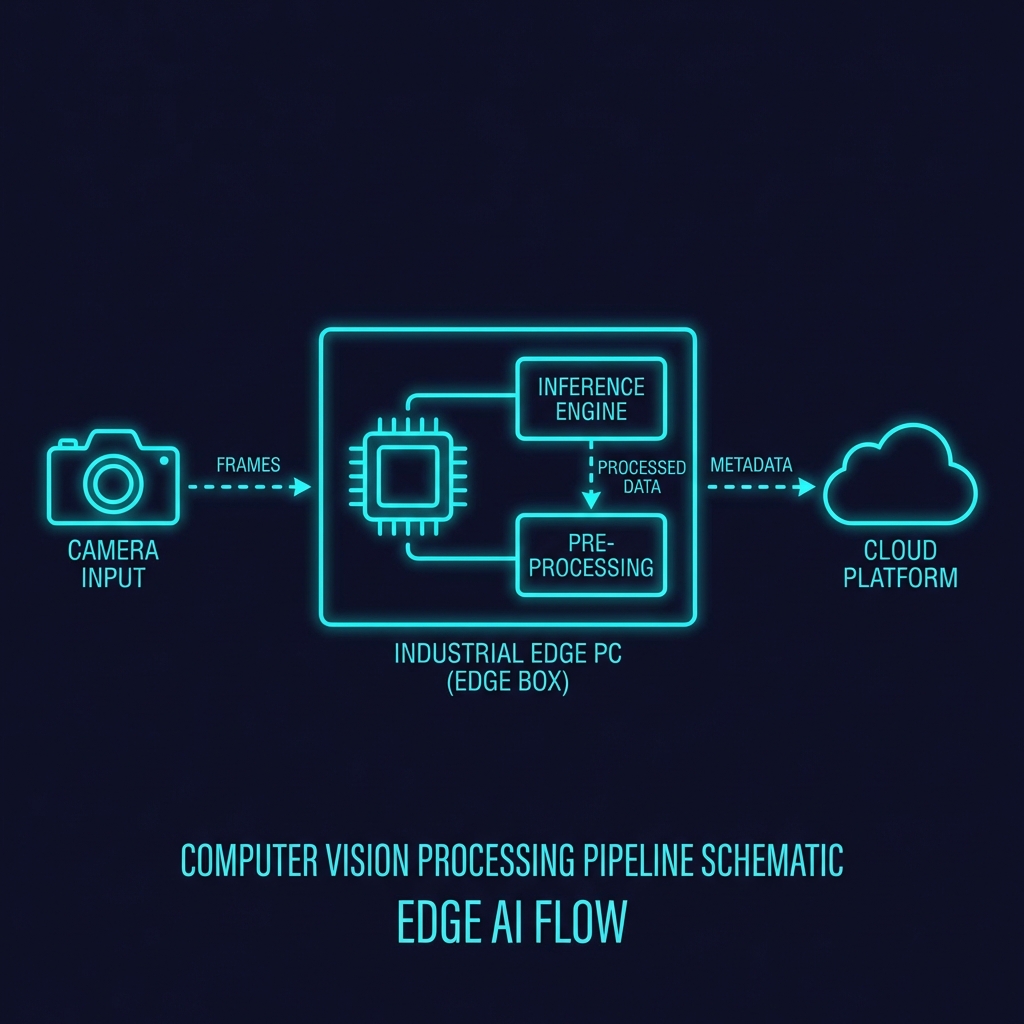

Detecting a defect on a production line moving at 3 meters per second requires more than a simple camera. It requires ultra-low-latency Edge AI.

In this “Capstone post,” we tie together everything we’ve learned: industrial protocols, hardened hardware, and state-of-the-art artificial intelligence to create an automated quality inspection system.

1. The Tech Stack: YOLOv11 + Hailo-8

To achieve 100+ FPS on a Raspberry Pi 5, you can’t use the CPU. You need an NPU (Neural Processing Unit).

- YOLOv11: A recent detection model from Ultralytics (released under the name YOLO11), well suited to spotting small defects like cracks or stains with high precision.

- Hailo-8: A 26 TOPS accelerator that processes the model in milliseconds without heating up the CPU.

2. Closing the Loop: From Inference to Action

An AI model that only shows a red box on a screen is useless in a factory. The real value is in stopping the machine or ejecting the defective part.

The Workflow

- Capture: HQ camera capturing at 120 FPS.

- Inference: The Hailo-8 processes the frame and confirms a defect with >90% confidence.

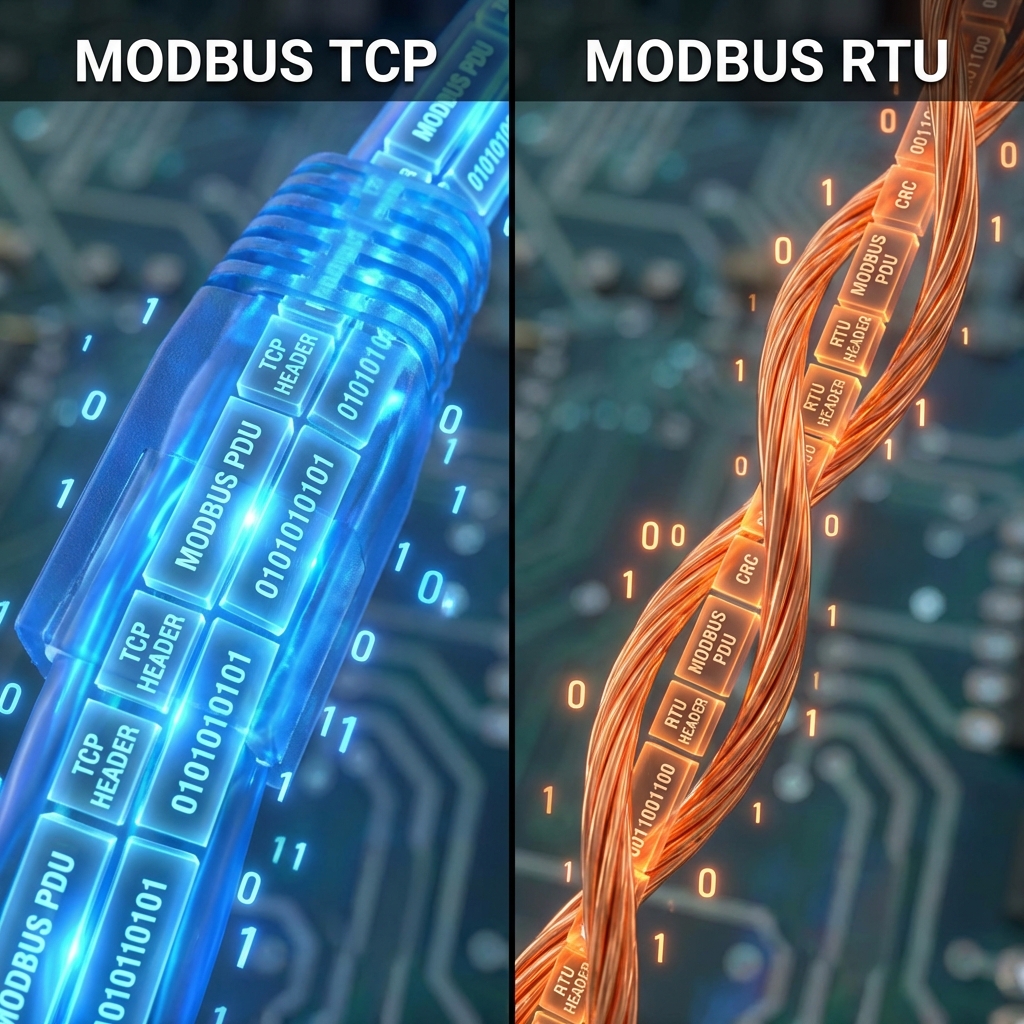

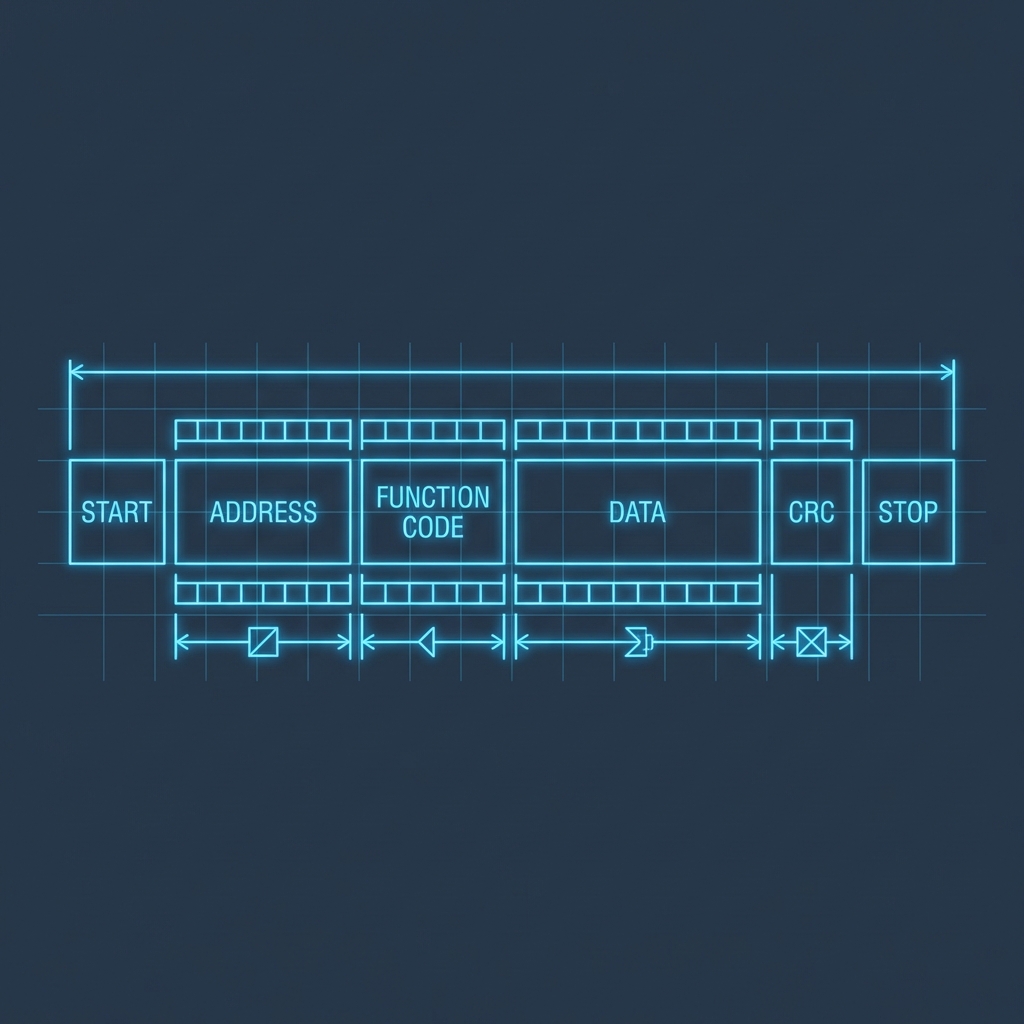

- Action: The Python script sends a Modbus TCP command to the PLC, setting coil 100 to trigger a pneumatic blower that ejects the part.
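The workflow above can be sketched as a small decision step. This is a minimal sketch, not the project's actual code: `Detection`, `has_defect`, `reject_if_defective`, and the `CoilWriter` protocol are hypothetical names, and the PLC client is assumed to expose a `write_coil(address, value)` method (pymodbus's `ModbusTcpClient` offers a call of that shape). The 0.90 threshold and address 100 come from the steps above.

```python
from dataclasses import dataclass
from typing import Iterable, Protocol

REJECT_COIL = 100            # rejector address from the workflow above
CONFIDENCE_THRESHOLD = 0.90  # ">90% confidence" from the inference step


class CoilWriter(Protocol):
    """Anything with a Modbus-style write_coil method (assumed interface)."""

    def write_coil(self, address: int, value: bool) -> object: ...


@dataclass(frozen=True)
class Detection:
    """One detection returned by the model (hypothetical structure)."""
    label: str
    confidence: float  # 0.0 to 1.0


def has_defect(detections: Iterable[Detection],
               threshold: float = CONFIDENCE_THRESHOLD) -> bool:
    """Return True if any detection exceeds the confidence threshold."""
    return any(d.confidence > threshold for d in detections)


def reject_if_defective(plc: CoilWriter,
                        detections: Iterable[Detection]) -> bool:
    """Set the rejector coil when a defect is confirmed; report whether it fired."""
    if has_defect(detections):
        plc.write_coil(REJECT_COIL, True)
        return True
    return False
```

Keeping the decision separate from the Modbus call makes the threshold logic testable without a PLC on the bench. A coil that is set stays set until something clears it, so the reset (by the script or by the PLC program) still has to be handled somewhere.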

👉 Full Code: edge-ai-inspection

Integrated Logic Example

    if results.defect_detected:
        # Write to PLC coil 100 (rejector)
        plc.write_coil(100, True)

3. Docker Deployment

On the plant floor, you don’t install libraries by hand. You use containers. This ensures that the versions of the Hailo drivers and OpenCV are identical in the lab and on the assembly line.
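One way to make the "identical versions" guarantee checkable is a startup guard that runs inside the container. The following is a sketch under assumptions: the package names and version numbers in `PINNED` are placeholders rather than the project's real pins, and `installed_version` and `check_pins` are hypothetical helpers. It relies only on the standard library's `importlib.metadata`.

```python
from importlib import metadata
from typing import Callable, Dict, List, Optional

# Placeholder pins (assumption): replace with the packages and versions
# that are actually baked into your image.
PINNED: Dict[str, str] = {
    "opencv-python": "4.10.0.84",
    "hailort": "4.18.0",
}


def installed_version(package: str) -> Optional[str]:
    """Return the installed version of a distribution, or None if absent."""
    try:
        return metadata.version(package)
    except metadata.PackageNotFoundError:
        return None


def check_pins(
    pins: Dict[str, str],
    lookup: Callable[[str], Optional[str]] = installed_version,
) -> List[str]:
    """Return one message per mismatch; an empty list means no drift."""
    problems = []
    for package, expected in pins.items():
        found = lookup(package)
        if found != expected:
            shown = found or "not installed"
            problems.append(f"{package}: expected {expected}, found {shown}")
    return problems


# Typical use at container start-up:
#     mismatches = check_pins(PINNED)
#     if mismatches:
#         raise SystemExit("Version drift detected:\n" + "\n".join(mismatches))
```

This only covers Python packages inside the image. Kernel-level drivers are loaded by the host, so they need to be pinned on the host as well.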

4. Critical Latency: Jitter is the Enemy

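If the system takes 500 ms to process a frame, a part moving at 3 m/s has traveled 1.5 m by the time the PLC receives the command: it has already flown by. The delay also has to be consistent, because jitter makes the ejection timing unpredictable even when the average latency is low.

- Target Latency: <20 ms from the time light hits the sensor to the time air leaves the nozzle.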

- Optimization: Use GStreamer for the video pipeline, avoiding the overhead of OpenCV’s frame queue.
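To see why the budget is so tight, it helps to convert latency into distance traveled on the belt. The arithmetic below uses only figures from this post (3 m/s line speed, 120 FPS capture, 500 ms and 20 ms latencies); the function names are illustrative.

```python
LINE_SPEED_M_S = 3.0  # belt speed from the introduction
CAPTURE_FPS = 120.0   # camera frame rate from the workflow


def travel_mm(latency_ms: float, speed_m_s: float = LINE_SPEED_M_S) -> float:
    """Distance in millimetres that a part moves during the given latency."""
    # (m/s) * (ms) gives mm directly: the two factors of 1000 cancel.
    return speed_m_s * latency_ms


def frame_interval_ms(fps: float = CAPTURE_FPS) -> float:
    """Time between two consecutive frames, in milliseconds."""
    return 1000.0 / fps


for label, latency in [
    ("slow pipeline", 500.0),
    ("target budget", 20.0),
    ("one frame at 120 FPS", frame_interval_ms()),
]:
    print(f"{label}: {latency:.1f} ms -> {travel_mm(latency):.0f} mm")
```

Within the 20 ms target the part moves 60 mm, and it moves about 25 mm between two consecutive frames, so the rejector position and the trigger timing have to be planned around distances of that order.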

Conclusion

Artificial Intelligence is the new industrial sensor. By integrating YOLOv11 with standard protocols like Modbus, we turn a camera into an inspector that never tires and never blinks.
